ECS

Amazon Elastic Container Service (ECS) is a managed service for running containers.

You start working with ECS by creating a logical entity called a cluster.

It's the place where your containers run.

You can then run tasks or services on the cluster.

The exact procedure for creating a cluster is outside the scope of this guide. Please refer to the official documentation for that.

ECS basics

Below is a very condensed overview of ECS concepts, meant only to introduce the terminology.

To run any container on a cluster, you first have to create a task definition.

This is a blueprint for the container(s) that you want to run.

It includes information about the container image, CPU and memory requirements, networking and other parameters.

Based on the task definition, you can run tasks or services on the cluster.

A task is more like a one-time job, while a service is better suited to long-running workloads.

A service also provides extra capabilities like load balancing and auto-scaling.

Tasks and services are logical entities.

The actual execution has to happen on some compute resources.

The two main options that ECS can use are Fargate and EC2.

Fargate is a serverless approach.

EC2 is the more traditional way, where you have to manage the underlying infrastructure yourself.

The easiest way to run containers on ECS is to use Fargate, but it is also the more expensive option: you pay an extra fee for the convenience of being serverless.

To run on EC2, you first have to register instances with ECS.

The smoothest way to do that is to create a capacity provider and associate it with the cluster.

A capacity provider is yet another logical entity.

It defines the EC2 resources that ECS tasks run on.

As part of its definition, you specify an Auto Scaling group, which manages instance creation and termination.
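As a rough illustration of what a capacity provider definition contains, the sketch below builds the request parameters in the shape of the ECS `CreateCapacityProvider` API. The provider name, the Auto Scaling group ARN and the managed scaling settings are hypothetical placeholders chosen for this example, not values taken from the guide.

```python
import json


def capacity_provider_params(name: str, asg_arn: str, target_capacity: int = 100) -> dict:
    """Build keyword arguments in the shape of ECS CreateCapacityProvider."""
    # ECS accepts a target capacity between 1 and 100 (percent).
    if not 1 <= target_capacity <= 100:
        raise ValueError("target_capacity must be between 1 and 100")
    return {
        "name": name,
        "autoScalingGroupProvider": {
            # The Auto Scaling group that creates and terminates the instances.
            "autoScalingGroupArn": asg_arn,
            # Assumption: let ECS scale the group to fit the scheduled tasks.
            "managedScaling": {
                "status": "ENABLED",
                "targetCapacity": target_capacity,
            },
            "managedTerminationProtection": "DISABLED",
        },
    }


# Hypothetical name and ARN; substitute the values from your own account.
params = capacity_provider_params(
    name="datero-ec2",
    asg_arn="arn:aws:autoscaling:REGION:ACCOUNT_ID:autoScalingGroup:UUID:autoScalingGroupName/datero-asg",
)
print(json.dumps(params, indent=2))
```

With boto3 installed, a dictionary like this could be passed as `create_capacity_provider(**params)` on an ECS client. Associating the provider with the cluster is a separate call.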

Demo infrastructure

In this guide we will run a task and use an EC2-based capacity provider.

Task Definition

Our task definition will be very simple.

We run a single all-inclusive Datero container.

What we need to specify is the image URL, the required CPU and RAM resources, and the port mapping.

To access the web application, we expose port 80.

For database access, you would have to expose port 5432 as well.

Our main purpose is to show how to run Datero on ECS, so we will expose only the web application port.

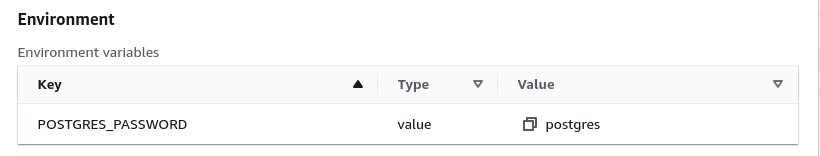

You are also required to specify the POSTGRES_PASSWORD environment variable.

This is dictated by the official image of the underlying Postgres database.

You can use any value you want, but for demo purposes we will use postgres as the password.
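The settings described above can be sketched as a task definition document. The snippet below is a minimal example that builds it as a Python dictionary and prints the JSON. The family and container names, the CPU and memory values, and the image placeholder are assumptions made for illustration. The host network mode mirrors the demo setup mentioned in the networking note of this guide.

```python
import json

# Hypothetical placeholder: take the real image URL from your AWS Marketplace
# subscription (ECR) or from Docker Hub.
IMAGE_URL = "<datero-image-url>:1.0.2"


def datero_task_definition(image: str, cpu: int = 1024, memory_mib: int = 2048) -> dict:
    """Build a task definition for a single all-inclusive Datero container."""
    return {
        "family": "datero",                 # assumed family name
        "networkMode": "host",              # demo setup; AWS recommends awsvpc
        "requiresCompatibilities": ["EC2"],
        "containerDefinitions": [
            {
                "name": "datero",           # assumed container name
                "image": image,
                "cpu": cpu,                 # CPU units, 1024 = 1 vCPU (assumed size)
                "memory": memory_mib,       # hard memory limit in MiB (assumed size)
                "essential": True,
                # Only the web application port is exposed; add 5432 for database access.
                "portMappings": [
                    {"containerPort": 80, "hostPort": 80, "protocol": "tcp"}
                ],
                # Required by the underlying Postgres image; demo value only.
                "environment": [
                    {"name": "POSTGRES_PASSWORD", "value": "postgres"}
                ],
            }
        ],
    }


task_definition = datero_task_definition(IMAGE_URL)
print(json.dumps(task_definition, indent=2))
```

The printed JSON could be pasted into the JSON editor of the task definition form in the console, or the dictionary could be passed to the boto3 ECS client as `register_task_definition(**task_definition)`.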

Image URL

Datero will soon be available on AWS Marketplace. To use it, you have to subscribe to the product; you can then use the image URL from AWS ECR.

AWS Marketplace

The latest version of Datero at the time of writing is 1.0.2.

To use another version, just replace 1.0.2 with the desired version number.

You can check the available versions on AWS Marketplace.

Alternatively, the Datero image is available on Docker Hub.

Support modes

Datero is free, but by default it comes without support. Our team offers paid support plans. Details on the available plans can be found on our website.

As a benefit, if you install Datero from AWS Marketplace, you get free support for 30 days. This is a great way to test Datero and get help from the team if needed.

Run Task

Once the task definition is created, you can run the task on the cluster.

Open the created task definition and click the Run Task button.

You will see plenty of options to specify.

At a minimum, you have to select the cluster and the capacity provider strategy.

Because we created an EC2-based capacity provider, we will use it.

We also specify 1 as the number of tasks to run.
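The same choices can be expressed programmatically. The sketch below builds the parameters in the shape of the ECS `RunTask` API: cluster, task definition, task count and a capacity provider strategy. The cluster, task definition and capacity provider names are hypothetical, as are the `weight` and `base` values.

```python
import json


def run_task_params(cluster: str, task_definition: str,
                    capacity_provider: str, count: int = 1) -> dict:
    """Build keyword arguments in the shape of ECS RunTask."""
    # A single RunTask call can start at most 10 tasks.
    if not 1 <= count <= 10:
        raise ValueError("count must be between 1 and 10")
    return {
        "cluster": cluster,
        "taskDefinition": task_definition,
        "count": count,
        # launchType is deliberately omitted: it cannot be combined
        # with a capacity provider strategy.
        "capacityProviderStrategy": [
            {"capacityProvider": capacity_provider, "weight": 1, "base": 0}
        ],
    }


# Hypothetical names; substitute the ones from your own setup.
request = run_task_params(
    cluster="datero-cluster",
    task_definition="datero",
    capacity_provider="datero-ec2",
)
print(json.dumps(request, indent=2))
```

With boto3 installed, the dictionary could be passed to the ECS client as `run_task(**request)`.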

Access Datero UI

If there is not enough capacity, the ECS cluster will signal the capacity provider to start a new EC2 instance.

Once the instance is up and running, the task will be scheduled on it.

If everything goes well, you will see the task in the Running state.

You can access the Datero web application by clicking the Public IP link in the Configuration section.

Networking setup

In the screenshot above, the task is running in host networking mode.

This is not best practice for a production environment, but it is fine for demo purposes.

AWS recommends using the awsvpc networking mode instead.

In that mode each task gets a separate ENI, and the task has no public IP address.

You then have to access it over the EC2 private IP address from within your AWS environment, or over an IPv6 address if you have enabled IPv6 connectivity at the VPC level.

Datero is configured to listen on both IPv4 and IPv6 addresses. For an example of how to serve Datero over IPv6, see IPv6 Support.
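Whichever address you end up with, the URL has to be formed slightly differently for IPv4 and IPv6: an IPv6 literal must be wrapped in square brackets. The helper below is a small sketch of that rule. The function name and the sample addresses (a private IPv4 address and an address from the IPv6 documentation range) are made up for this example.

```python
import ipaddress


def datero_url(host: str, port: int = 80) -> str:
    """Build the HTTP URL of the Datero web application for a host or IP address."""
    try:
        ip = ipaddress.ip_address(host)
    except ValueError:
        ip = None  # not an IP literal, treat it as a hostname
    if ip is not None and ip.version == 6:
        # IPv6 literals must be enclosed in brackets inside a URL.
        host = f"[{ip.compressed}]"
    suffix = "" if port == 80 else f":{port}"
    return f"http://{host}{suffix}/"


print(datero_url("10.0.1.25"))    # http://10.0.1.25/
print(datero_url("2001:db8::1"))  # http://[2001:db8::1]/
```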

Congratulations! You have successfully installed Datero on AWS ECS.

Next steps

You might find it useful to check the Overview section. It will familiarize you with what you get after the installation.

More details on the initial setup can be found in the Installation section.

For a complete use-case example, see the Tutorial section.

For configuration of individual data sources, refer to the Connectors section.